Motion capture, or MoCap, is the process of recording the movements of real humans or objects using specialized technology and transferring that data to digital models for use in films, animation, or video games. In this process, markers or sensors are attached to an actor’s body or to objects, and specialized cameras or receivers record their motion accurately. The recorded data is then applied to a digital model to produce realistic animated movement.

In film and animation, motion capture is especially valuable for producing natural, lifelike motion for digital characters or non-human creatures such as fictional or animated beings.

Benefits and Applications

Motion capture allows complex movements and subtle details of human motion to be recorded quickly and applied to digital models with far less manual keyframing than hand animation requires.

In the video game industry, MoCap is used to create realistic character movements and interactions, ensuring that actions, reactions, and expressions are simulated accurately.

This technology significantly accelerates production while enhancing realism, making it essential for projects that require authentic human motion, particularly in films and video games.

Body vs. Facial Motion Capture

In MoCap, body and facial movements are often recorded with separate systems, each with its own workflow and applications.

These two components can be captured using different techniques, each serving specific purposes, which will be discussed in detail in this series.

In many projects, especially complex animated films or video games, capturing body and facial motion simultaneously is crucial for delivering a richer, more natural experience.

Topics Covered in This Series

In this series, we explore a wide range of key topics related to motion capture (MoCap), including:

- Applications of Motion Capture in animation and visual effects (VFX).
- Differences in MoCap workflows for VFX, animation, and video games.
- Types of Motion Capture by technical and hardware setup, along with their advantages, disadvantages, and areas of application.
- Digital Actors: understanding the concept of a motion capture actor and how it differs from a traditional actor.
- Stunt Motion Capture and Stunt Performers: the distinctions between MoCap actors and stunt performers.
- Full-Body Motion Capture: processes and stages, including optical tracking systems, camera setup, optical markers, motion recording, data processing, refinement, testing, and final output integration.
- Facial Motion Capture: data analysis, facial motion simulation, transferring facial motion to digital models, emotion and expression analysis, adding fine details, data cleanup, and optimization.

Why Motion Capture Is Important

Motion capture has gained special significance due to its widespread applications and profound impact on digital content creation, films, and video games, and even on other fields such as medicine and sports.

Learning MoCap not only helps individuals improve the quality of their projects but also prepares them for emerging career opportunities in creative and technological fields.

Career Opportunities in Creative and Tech Industries

With the growth of industries such as filmmaking, animation, game development, and emerging sectors like virtual reality (VR) and augmented reality (AR), the demand for skilled motion capture professionals is rising.

Mastering this technology can open new career pathways for anyone looking to work in these fields, making MoCap expertise a vital skill for those aiming to succeed in the digital and creative industries.

Advancing Artistic and Technical Careers

Combining art and technology in motion capture is a unique skill that can elevate your creative abilities to a whole new level.

Learning how to use this technology enables animators, character designers, and filmmakers to create more precise and engaging works.

Familiarity with Advanced Tools and Techniques

Studying motion capture gives you hands-on experience with advanced tools and software such as Vicon and OptiTrack capture systems and Autodesk MotionBuilder.

These tools are not only essential for digital content creation but can also be applied to other technical projects.

Enhancing Project Quality

Motion capture specialists can significantly improve the quality of large-scale projects.

Since this technology directly impacts animation realism and visual effects, mastering it can lead to higher-quality outputs and greater project success.

The Origins of Motion Capture

Motion capture, while a relatively modern technology in animation, filmmaking, and video games, has roots that go back decades.

Here is a brief history of the invention and early use of this technology:

Early Beginnings: The 1930s

The foundations of modern motion capture trace back to the early 20th century.

Animators, including those at Walt Disney’s studio, used techniques such as rotoscoping, invented by Max Fleischer in the 1910s, to base character movements on recorded real human motion.

Actors were filmed performing actions, and animators then traced the footage frame by frame to create their drawings.

1930s–1940s: Laying the Theoretical Foundations

During this period, Robert H. Hessley and other biomechanics researchers studied human motion scientifically, attempting to develop principles for animating realistic movement in 2D animation.

These efforts laid the groundwork for future motion capture technology.

Technological Advances in the 1960s and 1970s

In these decades, cameras and sensors were increasingly used to record human motion.

Researchers and engineers at universities and research institutions began developing systems capable of capturing motion and converting it into digital data.

1970s: The First Digital Motion Capture Efforts

The 1970s saw the first attempts to digitally record human motion.

Significant advancements were made by Kingsley and Ralph Culbrook, who developed some of the earliest optical motion capture systems, using cameras to track real human movements.

These systems enabled researchers to analyze and simulate complex human motion accurately.

Entering the Film and Animation Industry (1980s)

The first commercial uses of motion capture began in the 1980s within the animation and filmmaking industry.

Studios such as ILM (Industrial Light & Magic) and Walt Disney Studios gradually adopted MoCap technology to enhance animation and visual effects.

Disney’s The Little Mermaid (1989) is sometimes cited as an early example, but its animators worked from live-action reference footage of performers, a descendant of rotoscoping, rather than from digitally captured motion data.

1980s–1990s:

During these decades, motion capture technologies advanced significantly, and their use expanded in filmmaking and computer animation.

Companies such as Vicon and Motion Analysis began developing and providing professional motion capture systems.

Early Major Uses in Film and Video Games (1990s)

By the 1990s, motion capture had become a core technology in the film and video game industries.

A major development during this period was the use of MoCap for 3D animation.

One of the most prominent early projects to employ motion capture was Star Wars: Episode I – The Phantom Menace (1999), which used MoCap technology to animate digital characters such as Jar Jar Binks.

During the same decade, video games also began using motion capture to simulate realistic human movements.

For example, Leo Trochi and his team at EA Sports in the late 1990s used MoCap to record actor movements and translate them into in-game character animations.

Motion Capture in the Modern Era (2000s–Present)

From the 2000s onward, motion capture became an essential tool in producing 3D films and video games.

Movies such as Avatar (2009) used motion capture to create realistic and natural character movements, employing specialized cameras to capture actors’ performances in fully digital 3D environments.

Expansion into the Video Game Industry

Today, MoCap systems are widely used in video games to create lifelike characters and record complex in-game movements.

Games like Red Dead Redemption 2 and The Last of Us demonstrate how motion capture enhances both animation quality and visual realism, significantly elevating the overall gaming experience.

Summary of Motion Capture History

Early developments in motion capture date back to the first half of the 20th century, particularly the 1930s and 1940s, when animators and researchers attempted to simulate human movement for 2D animation.

The first commercial applications of the technology emerged in the 1980s within animation and filmmaking. By the 1990s, motion capture had become a core technology for cinema and video game production.

From the 2000s onward, MoCap technology saw widespread adoption in film, video games, and 3D animation. Today, motion capture is one of the primary tools for creating realistic animations and visual effects, making it an essential component in cinematic and video game production.

Applications of Motion Capture in Animation and Visual Effects

Motion capture (MoCap) is widely used in the visual effects (VFX) industry to create natural and realistic animations of actors or digital creatures.

This technology allows for precise recording of movement and transferring it to digital models, ultimately producing more believable and lifelike visual effects.

Key applications of motion capture in VFX include:

1. Creating Digital Creatures and Realistic Characters

Motion capture is frequently used to record precise movements for digital characters, including:

- Fictional or digital creatures: MoCap captures movements from actors or models and applies them to digital creatures such as dinosaurs, animals, or mythical beings to achieve natural motion.
- Accurate character animation: In films with digital characters, such as science fiction or fantasy movies, MoCap is used to create complex, realistic movements.

2. Integrating Live Actors with Digital Effects

Motion capture helps blend live-action performances with digital environments or objects:

- High-end VFX films: In movies such as Avatar and the Planet of the Apes reboot films, actors’ performances are captured with MoCap and then transferred to digital characters or creatures.
- Performance in digital worlds: Actors wear specialized MoCap suits, and their movements are mapped into virtual worlds, allowing digital characters to move naturally.

3. Creating Visual Effects for Action Scenes

MoCap is crucial for fast-paced or complex action sequences, helping to produce realistic movements:

- Fighting or jumping motions: In films with combat or rapid movements, MoCap records actors’ performances and transfers them to digital characters, resulting in smoother, more detailed action scenes.
- Complex body movements: For characters moving in fully digital 3D environments, MoCap accurately captures real human movements and applies them to digital models.

4. Facial Movements and Emotional Reactions

Motion capture is not only used for recording body movements but also for capturing facial expressions and emotional reactions. This aspect is crucial for creating characters with realistic and expressive emotions.

Facial Movements:

Using motion capture technology, subtle facial movements such as blinking, lip changes, eyebrow shifts, and micro-expressions can be recorded. These data are then applied to digital models, allowing characters to react more naturally.
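One common way to apply captured facial data, assumed here rather than stated in this article, is blendshapes (also called morph targets): the capture is solved into a weight per expression, and each vertex of the face is offset from a neutral pose by the weighted differences. The minimal sketch below uses a hypothetical two-vertex "face" and a single hypothetical "smile" shape; production rigs have thousands of vertices and dozens of shapes.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def apply_blendshapes(
    neutral: List[Vec3],
    targets: Dict[str, List[Vec3]],
    weights: Dict[str, float],
) -> List[Vec3]:
    """Deform a neutral mesh: v = neutral + sum_i w_i * (target_i - neutral)."""
    result = []
    for idx, (nx, ny, nz) in enumerate(neutral):
        dx = dy = dz = 0.0
        for name, weight in weights.items():
            tx, ty, tz = targets[name][idx]
            dx += weight * (tx - nx)
            dy += weight * (ty - ny)
            dz += weight * (tz - nz)
        result.append((nx + dx, ny + dy, nz + dz))
    return result


# Hypothetical two-vertex "face": vertex 0 is a mouth corner, vertex 1 stays fixed.
neutral_face = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
shapes = {"smile": [(0.0, 1.0, 0.0), (1.0, 0.0, 0.0)]}

# One capture frame in which the facial solver reported a half-strength smile.
frame = apply_blendshapes(neutral_face, shapes, {"smile": 0.5})
print(frame)  # [(0.0, 0.5, 0.0), (1.0, 0.0, 0.0)]
```

Because each frame of facial capture only needs a small set of weights, the same data can drive different characters as long as they share the same expression shapes.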

Creating Realistic Emotions:

This enables digital characters, particularly in animation or sci-fi films, to accurately convey human-like emotions and responses.

Enhanced Realism and Precision:

MoCap ensures that movements and interactions in VFX films appear more lifelike. This technology is particularly useful for scenes that require complex and precise movements.

Benefits:

- Natural visual effects: Instead of animating complex motions by hand, MoCap transfers recorded movements directly to digital models, making visual effects more realistic.
- Time and resource efficiency: Motion capture reduces the time required for animation while delivering precise, high-quality results.

Differences in Motion Capture Applications: VFX, Animation, and Games

Although motion capture (MoCap) is used across VFX, animation, and video games, its application and objectives differ slightly in each field. The underlying technology is similar, but the requirements and workflows vary.

Motion Capture in VFX:

In the VFX industry, MoCap is primarily used to create natural and realistic visual effects. The goal is to transfer real human or creature movements into the digital world and integrate them with fully digital environments or hybrid live-action/digital scenes.

Key applications in VFX include:

- Creating digital characters: Real actor movements are captured to animate digital characters, such as mythical creatures or animals.
- Integrating actors with digital environments: Actors’ performances are recorded and applied to digital characters in virtual settings.
- Facial movements and emotional reactions: Motion capture is used to accurately represent natural facial expressions and emotional responses in digital characters.

Motion Capture in Animation

In animation, motion capture is used to create more natural and realistic movements for digital characters and creatures. Unlike VFX, which focuses on visual effects and integrating real-world footage with digital elements, animation emphasizes storytelling and character development.

Key applications in animation include:

- Accurate character movements: MoCap is used to record precise movements for digital characters.
- Enhanced realism: It helps animators depict complex human or animal movements more accurately and naturally.

Motion Capture in Video Games

In the video game industry, motion capture is used to create natural and interactive movements within games. Unlike VFX and animation, which mainly focus on pre-rendered visual content, game development emphasizes dynamic interactions and real-time player responses.

Main applications in games include:

- Realistic character movements: MoCap is applied to animate player-controlled characters and NPCs (non-player characters), including actions like running, walking, jumping, and other interactions.
- Enhanced gameplay depth: Motion capture allows for complex interactions between characters and the game world, creating more immersive experiences.
- Use in realistic simulations: Sports games or driving simulators use MoCap to generate precise and lifelike motions.

Goals and Objectives Across VFX, Animation, and Games

- VFX: The primary goal is to create realistic visual effects and integrate them with digital environments.
- Animation: The focus is on telling engaging stories and bringing characters to life using natural movements.
- Video Games: The aim is to deliver interactive and realistic experiences for players.

Flexibility for dynamic changes:

In video games, motion-captured animations must respond to player input in real time: the game engine blends and switches between recorded clips so that character movements change dynamically and continuously. In contrast, in VFX and animation, movements are usually pre-recorded and fixed.

Real-time interactions:

In games, MoCap-driven movements are triggered live in response to the player, whereas in films and animation, character movements are mostly pre-recorded with limited adjustments.
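Runtime blending of captured clips can be illustrated with a minimal sketch. It assumes two hypothetical clips (walk and run) sampled at the same point in the stride, each stored as one rotation angle per joint, with the blend weight driven by the player’s speed. Real engines blend full 3D rotations (typically quaternions) and keep the clips’ timing synchronized; all names and values here are illustrative.

```python
from typing import Dict

Pose = Dict[str, float]  # joint name -> rotation in degrees (single axis, simplified)


def blend_weight(speed: float, walk_speed: float, run_speed: float) -> float:
    """Map the player's current speed to a 0..1 weight toward the run clip."""
    t = (speed - walk_speed) / (run_speed - walk_speed)
    return max(0.0, min(1.0, t))


def blend_poses(walk: Pose, run: Pose, weight: float) -> Pose:
    """Linearly interpolate each joint between two motion-captured poses."""
    return {joint: (1.0 - weight) * walk[joint] + weight * run[joint] for joint in walk}


# Hypothetical poses taken from two captured clips at the same stride phase.
walk_pose = {"hip": 20.0, "knee": 30.0}
run_pose = {"hip": 40.0, "knee": 70.0}

w = blend_weight(speed=3.0, walk_speed=1.5, run_speed=4.5)  # 0.5: halfway between clips
print(blend_poses(walk_pose, run_pose, w))  # {'hip': 30.0, 'knee': 50.0}
```

The weight is recomputed every frame from the controller input, which is what lets a finite library of captured clips produce continuously varying movement.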

Summary:

While the core principles of motion capture are similar across VFX, animation, and gaming, the applications differ according to the needs of each industry. VFX focuses on visual effects and blending reality with digital environments, animation focuses on creating natural and engaging digital characters, and video games focus on producing interactive, lifelike experiences for players.

Types of Motion Capture: Technical and Hardware Perspectives

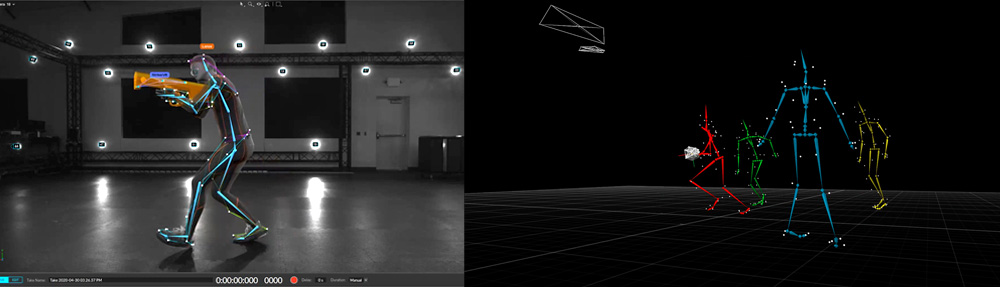

1. Optical Motion Capture

Optical Motion Capture is one of the most common techniques in the VFX and animation industry, used to record the movements of humans or objects in the real world. This method relies on specialized cameras and optical systems to capture high-quality and precise motion data for use in digital animation or visual effects.

Process of Optical Motion Capture:

- Attaching Markers: Small markers, usually spherical and coated with retroreflective material (some systems use active LED markers instead), are attached to the actor’s body or to other objects. They are typically placed on joints and other key anatomical landmarks. A retroreflective coating sends light straight back toward its source, so the markers appear as bright points to the cameras, which in most systems illuminate the capture area with infrared light.
- Using Optical Cameras: Multiple optical cameras are positioned around the actor or object at different angles. Each camera detects and records the two-dimensional image position of every marker it can see.
- Tracking and Calculating Positions: The cameras’ tracking data is sent to motion capture software, which combines the views of all cameras that see the same marker to calculate (triangulate) its three-dimensional position and extract the motion data.
- Analyzing and Transferring Data: The captured data is processed in specialized software using advanced algorithms to model the actor’s movements and convert them into digital motion data. This data can then be used to create realistic animations or adjust movements for visual effects.
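The position calculation can be illustrated with a minimal triangulation sketch. Commercial systems solve a least-squares problem over every calibrated camera that sees a marker; here, as a simplification, we assume only two cameras whose centres are known from calibration and whose viewing rays toward the marker have already been derived from the marker’s 2D image positions. The sketch uses the midpoint method, a standard two-view triangulation technique. The coordinates and function names are hypothetical.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Vec3:
    """Estimate a marker's 3D position from two camera rays.

    Each ray starts at a camera centre (c1, c2) and points along the direction
    (d1, d2) in which that camera saw the marker. Noisy rays rarely intersect
    exactly, so the midpoint of their closest approach is returned.
    """
    w0 = tuple(p - q for p, q in zip(c1, c2))
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the marker cannot be triangulated")
    s = (b * e - c * d) / denom  # distance parameter along ray 1
    t = (a * e - b * d) / denom  # distance parameter along ray 2
    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    return tuple((x + y) / 2.0 for x, y in zip(p1, p2))


# Two hypothetical cameras 1 m apart, both seeing a marker 2 m in front of them.
marker = triangulate_midpoint((0.0, 0.0, 0.0), (0.5, 0.0, 2.0),
                              (1.0, 0.0, 0.0), (-0.5, 0.0, 2.0))
print(marker)  # (0.5, 0.0, 2.0)
```

The parallel-ray check reflects a practical rule: cameras that view a marker from nearly the same direction give a poorly conditioned estimate, which is one reason cameras are spread around the capture volume.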

Advantages and Challenges of Optical Motion Capture

Advantages:

- High Accuracy: This method can capture movements with very high precision.
- Wireless Markers: Passive markers need no cables or batteries, so the actor is not tethered to the equipment and can move freely.
- Flexibility in Positioning: Cameras capture motion from multiple angles, making it easier to record complex movements.

Challenges:

- Large Space Requirement: Optical systems need a spacious area for proper camera placement and accurate motion capture.
- Environmental Limitations: This technique works best in a controlled studio. Sunlight, shiny surfaces, and stray reflections can create false markers, and markers hidden from the cameras (occlusion) by props, other actors, or the actor’s own body leave gaps in the data.
- High Cost: Installing optical motion capture systems can be expensive due to the need for advanced cameras and specialized equipment.

Applications:

Optical motion capture is widely used in feature films, video games, 3D animation, and virtual/augmented reality development.

2. Inertial Motion Capture

Inertial Motion Capture is a modern and widely used technique in the VFX and animation industry that captures human or object movements without relying on optical cameras. This method uses inertial measurement units (IMUs), which combine accelerometers and gyroscopes (and usually magnetometers), to track motion in 3D space.

Process of Inertial Motion Capture:

- Sensor Attachment: Inertial sensors are attached to the actor’s body or built into a specialized motion capture suit. Each sensor measures linear acceleration and angular velocity, from which the orientation and movement of the body segment it is attached to can be estimated.
- Motion Measurement: The sensors continuously record acceleration and rotational changes of the body segments, including the arms, legs, head, and torso.
- Data Transmission to Software: Sensors are usually connected to a central unit that transmits the captured data wirelessly or via cable to motion capture software, which processes it to reconstruct body movements and apply them to digital models.
- Modeling and Data Processing: The motion capture software combines (fuses) the data from all sensors using specialized algorithms to replicate the movement precisely. The processed data is then ready for use in 3D animation or visual effects production.
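Sensor fusion can be sketched for a single tilt angle with a complementary filter, one simple and widely used fusion method. This article does not say which algorithm any particular system uses; commercial suits often rely on more elaborate filters such as Kalman-filter variants. The gyroscope is integrated for smooth short-term changes, while the accelerometer’s reading of gravity slowly pulls the estimate back toward the true tilt. The sensor data below is simulated and the parameter values are assumptions.

```python
import math
from typing import List, Sequence, Tuple


def complementary_filter(
    gyro_rates: Sequence[float],
    accels: Sequence[Tuple[float, float]],
    dt: float,
    alpha: float = 0.98,
    initial_angle: float = 0.0,
) -> List[float]:
    """Estimate one tilt angle (radians) by fusing gyroscope and accelerometer data.

    gyro_rates: angular velocity about the tilt axis in rad/s, one per sample.
    accels: (a_y, a_z) accelerometer readings in m/s^2, one per sample. While the
        body segment is not accelerating, gravity alone gives tilt = atan2(a_y, a_z).
    alpha: weight on the integrated gyro (smooth but drifts) versus the
        accelerometer angle (noisy but drift-free).
    """
    angle = initial_angle
    estimates = []
    for rate, (a_y, a_z) in zip(gyro_rates, accels):
        gyro_angle = angle + rate * dt      # short term: integrate rotation rate
        accel_angle = math.atan2(a_y, a_z)  # long term: direction of gravity
        angle = alpha * gyro_angle + (1.0 - alpha) * accel_angle
        estimates.append(angle)
    return estimates


# Simulated stationary sensor tilted 0.3 rad whose gyro has a 0.01 rad/s bias.
g, true_tilt, dt, n = 9.81, 0.3, 0.01, 1000
gyro = [0.01] * n
acc = [(g * math.sin(true_tilt), g * math.cos(true_tilt))] * n

fused = complementary_filter(gyro, acc, dt)              # starts from 0.0 rad
gyro_only = true_tilt + sum(rate * dt for rate in gyro)  # starts from the true tilt
print(f"fused: {fused[-1]:.3f} rad, gyro only: {gyro_only:.3f} rad")
```

After 10 simulated seconds the fused estimate settles within about 0.005 rad of the true 0.3 rad tilt (the small residual comes from the uncorrected bias), while pure gyro integration has drifted to about 0.4 rad even though it started at the correct angle.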

Advantages of Inertial Motion Capture

- Usable Anywhere: Unlike optical motion capture systems that require dedicated spaces and multiple cameras, sensor-based systems can be used in any environment, including open spaces or areas without specialized lighting.
- Portability: Sensors are lightweight and portable, making them easy to attach to the actor’s body or a specialized suit. This is especially useful for projects that require natural movements in diverse environments.
- Lower Cost: Sensor-based systems are generally more affordable than optical systems, making them suitable for smaller or independent projects.
- Flexibility for Complex Movements: High-precision sensors allow for accurate capture of dynamic and complex motions such as jumps, running, fast rotations, and other dynamic actions.

Challenges and Limitations:

- Lower Accuracy Compared to Optical Systems: While portable and flexible, sensor-based systems are generally less precise than optical systems, especially for the performer’s absolute position in space.
- Difficulty Capturing Very Fine Movements: For extremely subtle or intricate movements, such as finger motion, sensor-based systems may have limitations.
- Need for Precise Calibration and Drift Correction: Sensors require careful calibration. Because orientation and position are estimated by integrating sensor readings over time, small measurement errors accumulate as drift, and nearby metal or electronics can distort magnetometer readings.
- Limitations in Complex Interactions: If the actor interacts with objects (e.g., using props or weapons), sensor-based systems may struggle to accurately simulate these interactions.
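Drift can be made concrete with a short worked example. Position is obtained by integrating acceleration twice, so a constant sensor bias b grows into a position error of roughly 0.5·b·t². The sketch assumes a stationary sensor with a hypothetical bias of 0.01 m/s², sampled at 100 Hz and integrated with simple Euler steps; real systems limit this error with techniques such as sensor fusion and detecting when a foot is planted on the ground.

```python
from typing import Sequence


def integrate_position(accels: Sequence[float], dt: float) -> float:
    """Double-integrate 1D acceleration samples (m/s^2) into a position (m)."""
    velocity = 0.0
    position = 0.0
    for a in accels:
        velocity += a * dt         # first integration: acceleration -> velocity
        position += velocity * dt  # second integration: velocity -> position
    return position


# The sensor is actually stationary but reports a constant 0.01 m/s^2 bias.
bias, dt, n = 0.01, 0.01, 1000  # 1000 samples at 100 Hz = 10 s
duration = n * dt
estimated = integrate_position([bias] * n, dt)
closed_form = 0.5 * bias * duration ** 2
print(f"integrated drift: {estimated:.3f} m, 0.5*b*t^2: {closed_form:.3f} m")
```

Both values come out at roughly half a metre: ten seconds of a tiny bias is enough for a motionless performer to appear to slide across the stage.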

Applications:

- Film and Television: Capturing actor movements for realistic animation.
- Video Games: Animating digital characters and natural movements in 3D environments.
- Sports and Medicine: Analyzing athletes’ or patients’ movements for training or therapy.
- Virtual Reality (VR) and Augmented Reality (AR): Simulating users’ body movements in immersive environments.

In general, inertial motion capture is ideal for projects that require flexibility and portability rather than extreme precision, and it is widely used in gaming and 3D animation industries.

3. Markerless Motion Capture

Markerless Motion Capture is one of the newest motion capture techniques: it records body movements without any physical markers. Motion is extracted directly from ordinary video or images by software, eliminating the need for specialized capture cameras or wearable sensors. It typically relies on computer vision, deep learning, and image processing to estimate the performer’s pose and movement.

Process of Markerless Motion Capture:

- Recording Images or Videos: One or more ordinary cameras (DSLRs, video cameras, or even mobile phone cameras) record the actor or object, ideally from multiple angles, to obtain a complete and accurate view of the movements.
- Image Analysis and Processing: Specialized software uses computer vision algorithms to detect and track the human body or objects. These algorithms identify body parts such as the head, hands, feet, and joints, and track their positions across frames.
- Simulation and Motion Data Extraction: Once the body parts are detected, their tracked positions are converted into motion data for a 3D digital model, producing realistic digital movements.
- Refinement and Optimization: Machine learning and statistical models are often applied to improve accuracy and realism, for example by reducing jitter between frames. If necessary, manual adjustments are made to keep movements as natural and precise as possible.
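The extraction and refinement steps can be sketched with two small helpers, assuming a pose-estimation model has already produced 3D keypoints for each frame (the model itself is out of scope here). The first computes a joint angle from three keypoints; the second applies a trailing moving average, one simple way to damp frame-to-frame jitter. Production pipelines often use more sophisticated filters, and the keypoint values below are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle in degrees at keypoint b between segments b->a and b->c.

    For example a=shoulder, b=elbow, c=wrist gives the elbow bend.
    """
    ba = [p - q for p, q in zip(a, b)]
    bc = [p - q for p, q in zip(c, b)]
    norm = math.hypot(*ba) * math.hypot(*bc)
    if norm == 0.0:
        raise ValueError("keypoints coincide; angle is undefined")
    cos_theta = sum(x * y for x, y in zip(ba, bc)) / norm
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard against rounding past +/-1
    return math.degrees(math.acos(cos_theta))


def moving_average(values: Sequence[float], window: int) -> List[float]:
    """Trailing moving average that damps frame-to-frame jitter in a signal."""
    smoothed = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1): i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


# Hypothetical per-frame keypoints (shoulder, elbow, wrist) for one arm, in metres.
frames = [
    ((0.0, 1.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)),
    ((0.0, 1.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.1, 0.0)),
    ((0.0, 1.0, 0.0), (0.0, 0.0, 0.0), (1.0, -0.1, 0.0)),
]
raw = [joint_angle(*f) for f in frames]
print([round(x, 1) for x in raw], [round(x, 1) for x in moving_average(raw, 2)])
```

A longer window removes more jitter but adds lag, so the window size is a trade-off between smoothness and responsiveness.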

Advantages of Markerless Motion Capture

- No Physical Equipment Required: The biggest advantage of this method is that it requires no physical markers or wearable sensors, which makes the system much simpler and more convenient to set up and use.
- High Flexibility: Because no physical markers are needed, markerless motion capture can be used in open, natural, or unrestricted environments. Actors can move freely without wearing a suit, as long as they stay within the cameras’ view.
- Simpler and More Affordable: Because it eliminates complex and expensive equipment such as sensors, specialized optical cameras, and capture suits, this technique is ideal for smaller or lower-budget projects.
- Reduced Human Error: With no markers to place, errors from misplaced or slipping markers are avoided, and automatic image processing and AI algorithms reduce manual intervention.

Challenges and Limitations

- Lower Accuracy Compared to Marker-Based Systems: Since this system relies on image processing and pose estimation, it may be less precise than marker-based systems, especially when image quality is poor or body parts are difficult to distinguish (e.g., in crowded or dark environments).
- High Computational Requirements: Automatic image processing and precise motion analysis require significant computing power, which can slow down processing and demand high-performance hardware.
- Image Resolution Limitations: The accuracy of the results depends heavily on the quality and resolution of the captured footage. Low-resolution images may lead to less precise motion data.
- Limitations in Capturing Complex Movements: For highly complex or detailed actions, such as intricate hand motions, or when loose clothing hides the body’s contours, computer vision algorithms may struggle to replicate the motion accurately.

Applications

- Films and Animation: Used in the production of movies and 3D animations to simulate actor movements and digital characters without additional equipment.
- Virtual Reality (VR) and Augmented Reality (AR): For capturing user movements and interactions in VR/AR environments.
- Research and Education: In research, education, and simulations of human motion in medical, sports, and other scientific fields.
- Video Games: For creating realistic animation and simulating natural movements of characters in video games.

Markerless motion capture is particularly suitable for projects that require speed, ease of setup, and lower costs, and it is widely used in fast-paced productions, interactive applications, and budget-conscious projects.

Motion Capture Actor or Digital Actor

A motion capture actor or digital actor is someone who uses motion capture technology to record their body and facial movements in order to create a digital or 3D character for films, video games, or animations. These actors typically perform in a studio while wearing motion capture suits fitted with markers or sensors, often together with a head-mounted camera for the face, so that all the details of their body and facial movements are captured accurately.

Workflow for a Motion Capture Actor includes:

- Wearing Motion Capture Equipment: The actor puts on a motion capture suit fitted with markers or sensors that track precise body movements. The capture system sends this information to a computer, which converts it into digital data.
- Performing Movements and Emotions: The actor then performs body movements, gestures, and facial expressions on the capture stage. In some cases the actor performs only physical movements, while in others, such as fantasy films, they must also convey complex emotions through their performance.
- Transfer to 3D Model: The captured motion data is imported into specialized software and mapped onto the 3D model of a digital character, allowing the character to move accurately and naturally.
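Mapping captured data onto a character is commonly called retargeting. In its simplest form, each joint of the capture skeleton is matched to a bone of the character rig by name, and its rotation is copied across. The sketch below assumes hypothetical joint names, one rotation angle per joint, and identical rest poses on both skeletons; real tools such as Autodesk MotionBuilder also compensate for differing proportions and rest poses.

```python
from typing import Dict

# Hypothetical joint names: capture skeletons and character rigs rarely agree.
BONE_MAP: Dict[str, str] = {
    "LeftUpLeg": "thigh_L",
    "LeftLeg": "shin_L",
    "Spine": "spine_01",
}


def retarget_frame(captured: Dict[str, float], bone_map: Dict[str, str]) -> Dict[str, float]:
    """Copy each captured joint rotation onto the matching rig bone.

    Assumes both skeletons share the same rest pose and rotation conventions.
    Joints with no mapping (e.g. helper markers or rig-only bones) are skipped.
    """
    return {bone_map[joint]: rotation
            for joint, rotation in captured.items()
            if joint in bone_map}


frame = {"LeftUpLeg": 25.0, "LeftLeg": -40.0, "Spine": 5.0, "LeftToeMarker": 3.0}
print(retarget_frame(frame, BONE_MAP))
# {'thigh_L': 25.0, 'shin_L': -40.0, 'spine_01': 5.0}
```

Keeping the mapping in data rather than code means the same capture can be reused on a different character simply by swapping in that character’s bone map.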

Differences Between Motion Capture Actors and Traditional Actors

A motion capture actor, unlike a traditional actor, focuses primarily on body movements to bring digital characters to life. These actors also need a solid understanding of motion capture technology and processes. While traditional actors perform on physical film sets, digital actors perform animated or digital characters in virtual environments.

Key Differences:

- Recording Physical Movements and Facial Expressions: Motion capture actors perform specific physical actions with high precision so that they can be recreated in a virtual world. In addition to body movements, subtle details such as facial expressions and emotions are captured digitally. Traditional actors, on the other hand, perform on physical sets and focus more on natural and emotional acting, which the camera records directly.
- Interaction with Technology: Motion capture actors work directly with specialized technology and equipment (such as motion capture cameras and sensors) and often wear suits or devices that capture their body movements. Traditional actors perform without such specialized equipment in the filming environment.
- Famous Examples: Andy Serkis is a prime example of a motion capture actor. He portrayed Gollum in The Lord of the Rings and Caesar in the Planet of the Apes reboot films, delivering extraordinary digital performances. His ability to transfer emotion and movement to digital characters has made him one of the most renowned figures in the field.

Stunt Motion Capture and Stunt Performers

Stunt motion capture uses motion capture technology to accurately record and analyze the movements of stunt performers. It is designed to capture the complex, high-risk movements performed for films, video games, or animations.

Key Concepts:

- Stunt Performers: Stunt performers are trained professionals who execute physical, dangerous, or unusual actions on set. These can include falls, jumps, fights, collisions, or other complex movements that regular actors cannot safely perform. Stunt performers require specialized skills and rigorous training due to the inherent risks of their work.
- Stunt Motion Capture: In stunt motion capture, performers wear suits fitted with markers or sensors. The capture system converts their precise movements into digital data, which can then be applied to 3D models to produce realistic animation in films or video games. This allows filmmakers to recreate complex stunt sequences digitally with high accuracy and realism.

Applications of Stunt Motion Capture

Film:

Used to create realistic action sequences and stunts, such as jumps, falls, and fight scenes.

Video Games:

Used to simulate realistic movements for game characters.

Animation:

Used to transfer precise movements to animated characters.

Stunt motion capture allows filmmakers, game developers, and animators to digitally reproduce the exact movements of stunt performers, resulting in a more realistic and immersive experience for audiences.

Differences Between Motion Capture Actors and Stunt Performers

1. Purpose and Expertise:

- Motion capture actors specialize in creating digital characters and simulating precise movements in virtual environments. Their body and facial movements are captured for use in digital animation or video games.
- Stunt performers are professionals hired to execute high-risk physical actions in films, TV, or other media. Their goal is to perform complex and dangerous stunts that main actors cannot safely perform.

2. Nature of Work:

- Motion capture actors often work in virtual environments or digital spaces, and may not directly interact with the physical world. Their movements are ultimately applied to digital characters.
- Stunt performers operate in real-world settings, performing physical actions like fights, falls, jumps, and crashes.

3. Role in Production:

- Motion capture actors may portray a variety of characters, including animals, fantastical creatures, or imaginary beings, without needing extensive interaction with the real environment.
- Stunt performers are actively present in action-heavy scenes and require specialized physical training and understanding of real-world physics to safely perform dangerous stunts.

Summary: Motion Capture Actor vs. Traditional Actor vs. Stunt Performer

- Motion capture actors are performers who work with digital technologies to record their movements for digital characters and virtual environments.
- Traditional actors perform in real-world settings in front of cameras and focus on live-action filmmaking.
- Stunt performers are professionals trained to execute physically demanding and dangerous actions on set, delivering realistic, high-risk action for film or television.

Body Motion Capture (BMC)

Body Motion Capture involves recording the full-body movements of a performer, including arms, legs, torso, and head. Typically, this is achieved using optical markers or motion sensors placed on key points of the body. The main goal is to simulate natural human movements, such as walking, running, dancing, and interacting with objects.

Terminology:

-

Body Motion Capture: A general term for capturing human body movements. Sometimes it refers to capturing specific parts of the body but broadly covers full-body movements.

-

Full Body Motion Capture: A more precise term, emphasizing the capture of all body parts, including subtle motions like bending, twisting, and fine-grained limb movements.

Although both terms are often used interchangeably, Full Body Motion Capture highlights the comprehensive nature of the capture process.

Steps in Body Motion Capture

Step 1: Performer Preparation

This step includes all preparatory actions performed before motion capture begins. Proper preparation ensures that the system can accurately record the performer’s movements, which are then used for animation or visual effects.

Key Preparations:

-

Choosing the Right Outfit:

-

Performers wear a specialized motion capture suit that allows sensors or markers to be attached.

-

Suits are typically tight-fitting and smooth to maximize recording accuracy. Examples include spandex suits or other fitted garments without excess folds.

-

Attaching Sensors or Markers:

-

Sensors or markers are placed on key points of the body such as wrists, knees, shoulders, and other joints.

-

Markers are usually small retroreflective spheres (or, in active systems, LEDs) that the cameras can track precisely.

-

Setting Up Cameras:

-

Motion capture cameras are positioned around the performer to record movements from multiple angles.

-

Ensuring that all cameras can track the markers accurately is crucial for high-quality data capture.

Performer Physical and Mental Preparation

Before motion capture begins, the performer must be physically and mentally prepared:

-

Physical Readiness:

-

The performer should be in good physical condition, as the motion capture process may involve complex or physically demanding movements.

-

Mental Readiness:

-

The performer must clearly understand which movements are required so that they can be captured accurately.

-

This may include training sessions that instruct the performer on how to execute specific movements properly.

Preliminary Tests

-

Initial Tests: Before the main recording, one or more preliminary tests are conducted to ensure that all systems are functioning correctly and that there are no issues in data capture.

-

During these tests, the performer carries out simple movements, such as a standard calibration pose (often a T-pose), allowing the team to verify that the motion capture system is recording accurately.

By completing these steps, the performer is fully prepared to begin the motion capture process, ensuring that all motion data is accurately recorded and ready for use in subsequent production stages.

Step 2: Camera and Equipment Setup

The camera and equipment setup in the Body Motion Capture (BMC) process is critical, as the accuracy of the system depends heavily on the proper placement of cameras and devices. The setup involves the following steps:

-

Selecting an Appropriate Location:

-

The first step is to choose a suitable environment for motion capture.

-

The space should be large enough for full-body movement and should have controlled lighting to minimize exposure problems.

-

Camera Installation:

-

Specialized motion capture cameras are positioned around the capture space.

-

Cameras should be placed to ensure all parts of the performer’s body are visible from multiple angles.

-

Depending on the system, cameras may be stereo (paired) or single-unit.

-

The camera-to-performer distance must be set carefully, based on the camera type and model and the level of detail required, so that the markers are imaged at sufficient resolution.

-

Camera Angle Adjustment:

-

Camera angles must be adjusted to cover the body from all sides, so that movements, particularly of the hands and feet, are not occluded.

-

Installation and Calibration of Additional Sensors:

-

Apart from cameras, other equipment like motion sensors or optical markers must be installed on the performer’s body.

-

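Precise calibration is critical: it determines each camera’s position, orientation, and lens characteristics within a shared coordinate system and aligns any additional sensors with the cameras, so that all devices together produce accurate, analyzable motion data.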

-

Connection to Central System:

-

All cameras and sensors are connected to a central system (usually a computer or server) to collect and process data in real time.

-

This step typically involves launching BMC software, which converts incoming sensor and camera information into digital motion data.

-

Testing and Final Adjustments:

-

After setup, initial test captures are conducted to verify that all cameras and sensors are functioning correctly.

-

Any necessary adjustments are made to resolve potential issues and ensure high-precision motion data collection.

These steps collectively ensure that camera and equipment setup in BMC is precise, directly impacting the accuracy and quality of motion capture.
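A common way to check calibration during these test captures is the reprojection error: a known 3D marker position is projected through a camera’s calibrated model, and the distance in pixels to where the camera actually detected the marker is measured. The sketch below assumes an ideal pinhole camera with no lens distortion; the function names, camera parameters, and marker values are hypothetical and not taken from any particular motion capture package.

```python
import numpy as np

def project(K, R, t, X):
    """Project a 3D world point X to pixel coordinates with a pinhole camera.

    K: 3x3 intrinsic matrix, R: 3x3 rotation, t: translation of shape (3,),
    using the convention x_cam = R @ X + t. Lens distortion is ignored.
    """
    x_cam = R @ X + t              # world -> camera coordinates
    x_img = K @ x_cam              # camera -> homogeneous image coordinates
    return x_img[:2] / x_img[2]    # perspective divide

def reprojection_error(K, R, t, X, observed_px):
    """Distance in pixels between the projected and the detected marker."""
    return float(np.linalg.norm(project(K, R, t, X) - observed_px))

# Hypothetical camera: 1000 px focal length, principal point (640, 360),
# positioned so the world origin lies 5 m in front of it along +Z.
K = np.array([[1000.0, 0.0, 640.0],
              [0.0, 1000.0, 360.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.array([0.0, 0.0, 5.0])

marker = np.array([0.5, 0.25, 0.0])   # known marker position in metres
detected = np.array([741.0, 410.0])   # where the camera reported the marker
err = reprojection_error(K, R, t, marker, detected)  # 1.0 pixel
```

In practice, a calibration is judged by averaging this error over many markers, frames, and cameras; what counts as acceptable depends on the system and the project.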

Optical Motion Capture System

The Optical Motion Capture system is one of the most widely used methods for recording movement in motion capture. It tracks the motion of people or objects using special cameras and optical markers, relying on optical imaging to capture physical movements accurately. Its operation is explained in detail below:

Main Components of Optical Motion Capture:

-

Optical Cameras:

-

These cameras capture high-resolution images at high frame rates; many optical systems use infrared light for this purpose.

-

Specialized cameras are often used to record fast movements without losing detail.

-

Optical Markers:

-

These are small markers placed at specific points on the body or object, usually made of retroreflective material or, in active systems, built from light-emitting diodes (LEDs).

-

Markers are designed to reflect light or respond to specific lighting conditions for precise tracking.

How the Optical Tracking System Works:

-

Marker Placement:

-

Optical markers are attached to key body points, such as joints or other critical areas.

-

They are typically small reflective spheres or small LEDs, mounted on specialized suits or directly on the skin.

-

Camera Tracking:

-

Optical cameras installed around the capture space continuously track these markers.

-

Several cameras are placed at different angles around the space so that movement can be reconstructed in all three dimensions.

-

Each camera records the 2D positions of the markers in its own image and sends this data to a computer system.

-

Data Processing:

-

Motion capture software uses the incoming data to reconstruct the precise positions and movements of the markers.

-

By combining the 2D observations from multiple camera angles (a process called triangulation), the software calculates the movement of the body or object in 3D space.

Advantages of Optical Tracking Systems:

-

High Accuracy: Capable of capturing fine and detailed movements, such as finger, head, and facial motions.

-

Lightweight, Passive Markers: Unlike systems that require wearable electronic sensors, passive optical systems use small, wireless reflective markers, which are more comfortable for the performer and interfere less with movement.

-

Wide Coverage: Can track movements in a large space with multiple markers and cameras.

Challenges and Limitations:

-

Lighting Requirements: Requires adequate lighting for accurate operation; performance may degrade in low-light or complex lighting conditions.

-

Spatial Limitations: Typically needs a controlled space where cameras have clear visibility of the markers; outdoor or crowded environments can reduce accuracy.

-

Marker Interference: When markers are very close together or overlap from a camera’s point of view, the system may struggle to distinguish them and track them correctly.

Applications of Optical Motion Capture:

-

Film and Animation: Capturing actor movements and transferring them to digital characters.

-

Video Games: Simulating natural and realistic movements of characters.

-

Sports and Medical Research: Analyzing body motion in sports or studying injuries.

-

Military and Training Simulations: Virtual training and simulating human behaviors in various scenarios.

Step 3 of the Body Motion Capture Process: Motion Recording

Motion recording refers to the process of capturing accurate body movement data of an actor using specialized technologies. This stage is the most critical part of the BMC process and involves recording the actor’s real movements and converting them into digital data that can be used for animations, video games, or virtual reality projects. The steps of this process are as follows:

1. Starting Motion Capture:

-

After preparing the actor and equipment (suit, sensors, cameras), the motion capture session begins.

-

The actor enters the capture space, typically a controlled environment covered by multiple cameras.

-

The actor may be required to perform specific movements such as walking, running, jumping, or more complex actions necessary for the project.

2. Camera Operation and Data Collection:

-

Motion capture systems, usually optical or inertial, precisely record the actor’s movements.

-

In optical systems, cameras track the reflective markers placed on the actor’s body, capturing their positions in 3D space.

-

In inertial systems, sensors (inertial measurement units) mounted on the actor’s suit measure acceleration and rotation, from which the position and orientation of each body segment are calculated.

3. Capturing Detailed Movements:

-

Motion capture systems can record very precise changes in the positions and movements of body parts, such as knees, elbows, hands, and head.

-

Cameras or sensors continuously collect data, which is then transferred to a computer system for digital storage.

4. Real-Time Motion Simulation:

-

In some cases, the recorded data can be visualized live alongside the actor’s performance.

-

This allows directors and the production team to quickly assess whether movements are being captured correctly.

-

Actors may receive feedback to adjust and refine their motions for greater accuracy.

5. Accuracy and Data Correction:

-

Errors may occur if markers are obstructed or out of the cameras’ view, causing inaccurate recordings.

-

The technical team may ask the actor to repeat specific movements to ensure complete and precise data capture.
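When a marker is obstructed, the system simply has no position for it in those frames. A minimal sketch, assuming that missing samples are stored as NaN in a frames × 3 array for one marker, shows how such gaps could be located so the team can decide whether a movement needs to be repeated. The `needs_retake` rule and its `max_gap` threshold are hypothetical illustrations, not an industry standard.

```python
import numpy as np

def find_gaps(trajectory):
    """Return (start, end) frame ranges, end exclusive, where the marker is missing.

    trajectory: array of shape (frames, 3) with NaN in occluded frames.
    """
    missing = np.isnan(trajectory).any(axis=1)
    gaps = []
    start = None
    for i, m in enumerate(missing):
        if m and start is None:
            start = i                        # a gap begins
        elif not m and start is not None:
            gaps.append((start, i))          # the gap ended on the previous frame
            start = None
    if start is not None:
        gaps.append((start, len(missing)))   # gap runs to the end of the take
    return gaps

def needs_retake(trajectory, max_gap=10):
    """Hypothetical rule: flag the take if any gap exceeds max_gap frames."""
    return any(end - start > max_gap for start, end in find_gaps(trajectory))

traj = np.zeros((20, 3))
traj[3:5] = np.nan       # short 2-frame occlusion
traj[8:20] = np.nan      # 12-frame occlusion lasting to the end of the take
print(find_gaps(traj))   # [(3, 5), (8, 20)]
print(needs_retake(traj))  # True
```

Short gaps like the first one are usually filled during data processing, while long ones may justify recording the movement again.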

6. Data Management and Processing:

-

Once recording is complete, the data is typically imported into specialized software for processing and application to digital models.

-

This stage includes data processing and correction to ensure high accuracy and remove noise or potential errors.

7. Completion and Preliminary Review:

-

After the recording session, the captured data is reviewed and verified.

-

This may include checking videos of the recorded movements.

-

If certain actions require re-recording, the actor may return for additional sessions.

Summary:

The motion recording stage is one of the most sensitive parts of the Body Motion Capture process. Any mistakes during this phase can significantly affect the final quality of the project. Therefore, precision and coordination during this stage are absolutely crucial.

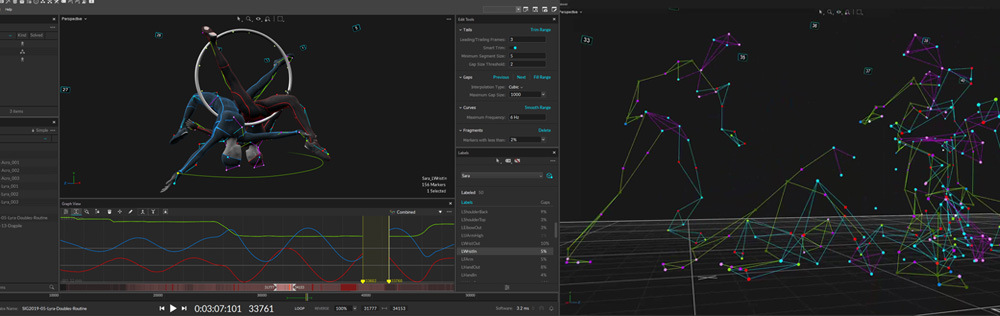

Step 4 of the Body Motion Capture Process: Data Processing

Data processing in motion capture is the procedure in which raw data obtained from tracking systems (optical, inertial, or other types) is converted into usable information for creating digital movements and 3D animations. This processing involves several steps, each with its own importance:

1. Data Acquisition:

-

The first step in data processing is collecting information from the tracking systems.

-

In this stage, sensors or cameras record the positions of physical points or markers.

-

The collected data typically includes 3D coordinates (X, Y, Z) for each marker across different time frames.

-

This raw information is continuously sent from the tracking devices to the computer.

2. Marker Identification and Labeling:

-

One of the main challenges in data processing is the accurate identification of markers.

-

In optical systems, multiple cameras simultaneously record the movements of markers, which may sometimes overlap or occlude one another in certain frames.

-

At this stage, the software must be able to distinguish and label each marker correctly to provide accurate data for each body part or object.
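One simple approach to labeling carries labels forward from frame to frame: each marker labeled in the previous frame is matched to the closest unlabeled point in the current frame. The sketch below uses a greedy nearest-neighbour rule with a hypothetical `max_dist` cutoff and hypothetical marker names; production software generally relies on more robust methods, such as fitting a skeleton model to the markers.

```python
import numpy as np

def propagate_labels(prev, points, max_dist=0.05):
    """Assign labels to unlabeled 3D points using the previous frame.

    prev: dict mapping label -> 3D position in the previous frame.
    points: array of shape (N, 3) with unlabeled positions in the current frame.
    Returns a dict label -> position. Labels with no point within max_dist
    (metres) are left out, which usually means the marker was occluded.
    """
    # Every (distance, label, point index) pair, closest first.
    pairs = sorted(
        (float(np.linalg.norm(points[j] - pos)), label, j)
        for label, pos in prev.items()
        for j in range(len(points))
    )
    labeled, used = {}, set()
    for dist, label, j in pairs:
        if dist > max_dist:
            break                    # all remaining pairs are even farther apart
        if label in labeled or j in used:
            continue                 # label or point already assigned
        labeled[label] = points[j]
        used.add(j)
    return labeled

prev = {"LWRIST": np.array([0.0, 1.0, 0.0]), "RWRIST": np.array([0.5, 1.0, 0.0])}
current = np.array([[0.51, 1.0, 0.0], [0.01, 1.0, 0.0]])  # order is arbitrary
result = propagate_labels(prev, current)
```

The greedy rule works while markers move only slightly between frames; when two markers come close together, as described under marker interference, it can swap labels, which is why real systems add further constraints.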

3. Data Cleaning:

-

Raw data often contains noise or errors caused by signal interference, unpredictable movements, or technical issues.

-

During this step, the software attempts to correct these errors and “clean” the data.

-

Techniques for data cleaning may include:

-

Removing incorrect or impossible data, such as extreme jumps or physically impossible movements.

-

Precise corrections, like aligning marker points across frames.

-

Noise reduction using digital filters.
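The first technique, removing physically impossible jumps, can be combined with gap filling in a few lines. The sketch below assumes one coordinate of one marker sampled at a fixed frame rate: any sample that jumps more than a threshold away from the last accepted sample is marked invalid, and all invalid samples are then filled by linear interpolation. The `max_step` value is hypothetical; real pipelines tune such thresholds to the capture rate and the type of motion.

```python
import numpy as np

def remove_spikes(x, max_step):
    """Mark samples that jump more than max_step from the last accepted
    sample as NaN. Assumes the first sample is valid."""
    x = np.asarray(x, dtype=float).copy()
    last_good = x[0]
    for i in range(1, len(x)):
        if np.isnan(x[i]) or abs(x[i] - last_good) > max_step:
            x[i] = np.nan          # implausible jump, or already missing
        else:
            last_good = x[i]
    return x

def fill_gaps(x):
    """Fill NaN samples by linear interpolation between valid neighbours.

    At the very start or end of a take, np.interp holds the nearest
    valid value instead of extrapolating.
    """
    x = np.asarray(x, dtype=float).copy()
    valid = ~np.isnan(x)
    frames = np.arange(len(x))
    x[~valid] = np.interp(frames[~valid], frames[valid], x[valid])
    return x

raw = [0.00, 0.01, 0.02, 0.90, 0.04, np.nan, 0.06]   # one spike, one missing frame
clean = fill_gaps(remove_spikes(raw, max_step=0.1))  # 0.00, 0.01, ..., 0.06
```

For a full marker trajectory, the same functions would be applied to the X, Y, and Z coordinates separately.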

4. 3D Reconstruction:

-

After data cleaning, the next step is reconstructing 3D movements.

-

This process uses the captured data to accurately compute the position of each marker in space.

-

In optical systems, triangulation (stereo reconstruction) is typically used, combining the 2D information from multiple cameras to reconstruct the 3D positions of the markers.

-

As a result, the exact positions and natural movements of the actor or object are reconstructed in three-dimensional space.
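Triangulation can be illustrated with the textbook linear method (the direct linear transform, DLT). Each camera’s 3×4 projection matrix and the marker’s 2D image position give two linear equations in the unknown 3D point; the equations from all cameras are stacked and solved with a singular value decomposition. This is a sketch assuming calibrated, distortion-free cameras and hypothetical camera parameters; commercial systems typically add non-linear refinement and outlier rejection.

```python
import numpy as np

def triangulate(projections, image_points):
    """Linear (DLT) triangulation of one marker seen by two or more cameras.

    projections: list of 3x4 projection matrices P = K [R | t].
    image_points: list of matching (u, v) pixel coordinates.
    Returns the estimated 3D point with shape (3,).
    """
    rows = []
    for P, (u, v) in zip(projections, image_points):
        # From u = (P[0] @ X) / (P[2] @ X), and likewise for v.
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.array(rows)
    # Least-squares solution of A @ X = 0: the right singular vector
    # belonging to the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]           # homogeneous -> Euclidean coordinates

def project(P, X):
    """Project a 3D point with a 3x4 projection matrix."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

# Two hypothetical cameras 1 m apart, both looking along +Z.
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])

marker = np.array([0.2, -0.1, 4.0])
uv1, uv2 = project(P1, marker), project(P2, marker)
estimate = triangulate([P1, P2], [uv1, uv2])   # recovers approximately marker
```

With noise-free observations the marker is recovered exactly; with real detections, adding more cameras makes the least-squares estimate more stable.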

5. Mapping to 3D Models:

-

In this stage, the 3D motion data of the markers is applied to a digital 3D model.

-

These models usually include digital skeletons representing the bone structure of a human body or other objects.

-

The software can map marker points to the skeleton joints and simulate the movement of the model according to the captured data.
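A small part of this mapping can be shown concretely. Once marker or joint positions are known, the angle at a joint can be derived from three points, for example shoulder, elbow, and wrist for elbow flexion. The positions below are hypothetical, and real solvers fit a whole skeleton at once rather than computing joint angles one at a time.

```python
import numpy as np

def joint_angle(a, b, c):
    """Angle in degrees at point b, between the segments b->a and b->c."""
    u = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    v = np.asarray(c, dtype=float) - np.asarray(b, dtype=float)
    cos_theta = np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))
    # Clip to guard against floating-point values slightly outside [-1, 1].
    return float(np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0))))

shoulder = [0.0, 1.4, 0.0]
elbow = [0.0, 1.1, 0.0]
wrist = [0.3, 1.1, 0.0]     # forearm held horizontally: a right angle
angle = joint_angle(shoulder, elbow, wrist)
```

The resulting angle per frame could then drive the rotation of the corresponding joint in the digital skeleton.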

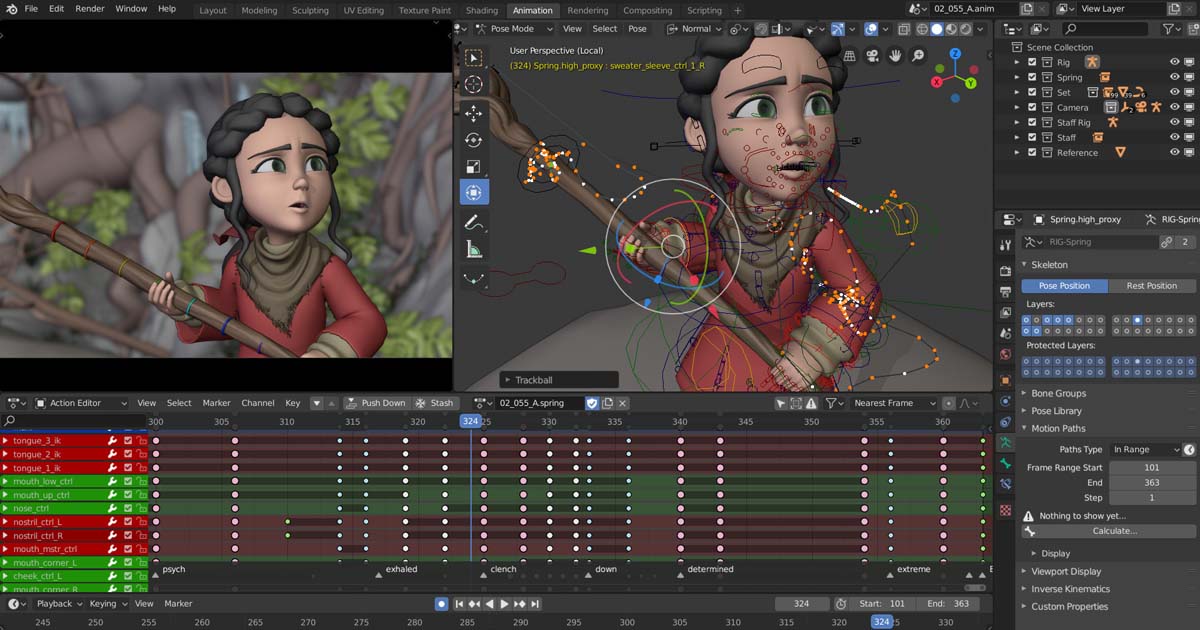

Animation Application

After motion capture data has been mapped to a 3D model, the next step is applying the animation. In this stage, the captured movements are transferred to the 3D model to create animations with natural and realistic motion. These movements can be used as keyframe animations or as part of a dynamic animation sequence in animation software such as Maya, Blender, or 3ds Max.

Refinement and Final Adjustments

Even after applying the captured motions, some details may require further fine-tuning. In this stage, animators or technicians may refine the motion capture data to add more subtlety to the movements. These adjustments can include:

-

Enhancing movement quality, for example, making the motions appear more natural.

-

Adding manual movements or facial expressions that were not directly captured by the system.

-

Correcting minor deviations in the recorded motions.

Final Output and Integration

Once the animation process is complete, the processed data is exported in final formats suitable for use in the target project. These outputs can be applied in:

-

Film production

-

Video games

-

Educational simulations

-

Research projects

Review and Testing

In many cases, after the animations or 3D models are ready, tests are conducted to verify the accuracy and correctness of the movements. These tests may include:

-

Real-time motion reviews

-

Various simulation scenarios

This ensures that the motion data is correctly applied and integrated into the final project.

Step 5: Correction and Optimization

In the Body Motion Capture process, the correction and optimization stage is a crucial step aimed at improving the accuracy and quality of the captured motion data. In this stage, the raw data obtained from sensors or cameras is reviewed and refined to eliminate any errors, inaccuracies, or deficiencies. This process generally includes:

Sensor Error Correction:

Motion capture sensors may produce errors due to signal interference, inaccurate motion tracking, or technical issues. These errors must be identified and corrected so that the captured movements are precise and realistic.

Data Alignment:

Especially in multi-camera or multi-sensor motion capture systems, the captured data may not always be perfectly aligned. Data alignment ensures that information from all sensors is correctly synchronized and combined for accurate motion representation.
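A basic form of alignment is bringing streams recorded at different rates onto a common timeline. The sketch below assumes every device’s timestamps are already expressed on a shared clock (in practice this usually requires hardware synchronization or timecode) and linearly interpolates each stream onto the same sample times. The sample rates and the test signal are hypothetical.

```python
import numpy as np

def resample(timestamps, values, target_times):
    """Linearly interpolate a 1D signal onto new sample times.

    timestamps must be increasing and on the same clock as target_times.
    """
    return np.interp(target_times, timestamps, values)

# Hypothetical streams: an optical system at 120 Hz and an inertial sensor at 100 Hz.
optical_t = np.arange(120) / 120.0
inertial_t = np.arange(100) / 100.0
optical_x = np.sin(2 * np.pi * optical_t)
inertial_x = np.sin(2 * np.pi * inertial_t)

# Common 60 Hz timeline, limited to the span covered by both streams.
end = min(optical_t[-1], inertial_t[-1])
common_t = np.arange(int(end * 60) + 1) / 60.0
aligned = np.column_stack([
    resample(optical_t, optical_x, common_t),
    resample(inertial_t, inertial_x, common_t),
])
```

Each row of `aligned` now holds samples from both devices for the same instant, which is what allows them to be combined.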

Motion Smoothing:

Some movements may appear jerky or unnatural. Motion smoothing involves refining the data so that movements appear more fluid and natural. Software algorithms are used to remove unwanted signals, sudden jumps, or glitches in the motion.
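One of the simplest smoothing algorithms is a centred moving average, which replaces each sample with the mean of itself and its neighbours. It is shown here only as an illustration; production tools often prefer filters such as a Butterworth low-pass or Savitzky-Golay filter, which preserve genuinely fast motion better. The window size and the sample values are hypothetical.

```python
import numpy as np

def moving_average(x, window=5):
    """Centred moving average of a 1D signal; window must be odd.

    The ends are padded by repeating the first and last samples, so the
    output has the same length as the input.
    """
    if window % 2 == 0:
        raise ValueError("window must be odd")
    half = window // 2
    padded = np.pad(np.asarray(x, dtype=float), half, mode="edge")
    kernel = np.ones(window) / window
    return np.convolve(padded, kernel, mode="valid")

jittery = np.array([0.0, 1.2, 1.8, 3.1, 3.9, 5.2, 6.0])
smooth = moving_average(jittery, window=3)
```

A wider window removes more jitter but also softens sharp, intentional movements, so the window is a trade-off between smoothness and responsiveness.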

Kinematic Error Correction:

This step addresses errors related to human anatomy or skeletal structure, such as unnatural joint bending, limbs that appear to stretch, or other unrealistic motion. Specialized algorithms detect and correct these errors to produce anatomically plausible, natural movement.
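One simple kinematic correction enforces constant bone lengths. Marker slippage or noise can make a limb appear to stretch or shrink, so the child joint is moved along the bone direction until the segment has its expected length. The sketch below assumes the reference length was measured earlier (for example, from a calibration pose); the values are hypothetical, and this is one basic technique rather than the full inverse-kinematics solve many tools perform.

```python
import numpy as np

def enforce_bone_length(parent, child, length):
    """Move child along the parent->child direction so the bone has the given length."""
    parent = np.asarray(parent, dtype=float)
    child = np.asarray(child, dtype=float)
    direction = child - parent
    norm = np.linalg.norm(direction)
    if norm == 0:
        raise ValueError("parent and child positions coincide")
    return parent + direction / norm * length

# Hypothetical forearm length of 0.26 m, while this frame's noisy data
# places the wrist 0.30 m from the elbow.
elbow = [0.0, 1.1, 0.0]
wrist = [0.30, 1.1, 0.0]
corrected = enforce_bone_length(elbow, wrist, 0.26)
```

For a full skeleton, such a correction would be applied from the root joint outward, so each corrected joint becomes the parent for the next bone.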

Optimization for Rendering or Animation:

If the motion capture data is intended for use in animation or CGI, it is optimized so that it can be processed efficiently in animation software or game engines while preserving rendering quality.

Adding Details:

Animators may add subtle details such as hand gestures, facial expressions, and other fine movements to enhance realism and make the motion appear more lifelike.

Goal of this stage:

The ultimate aim is to produce accurate, natural, and fluid motion data that can be realistically applied in any digital environment or animation project.

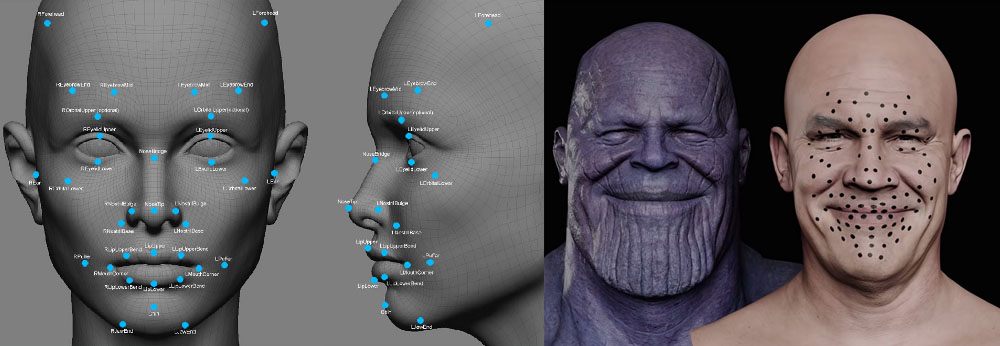

Facial Motion Capture (Face Motion Capture)

Facial Motion Capture (Face Motion Capture) refers to the process of precisely recording a person’s facial movements and transferring them to digital models. This technology is widely used in animated films, video games, and some virtual reality (VR) applications.

In this process, markers or sensors are placed on the actor’s face, and specialized cameras (often mounted on a helmet facing the actor) record subtle muscle movements, such as those of the eyebrows, eyes, mouth, and other facial features. These movements are then digitally processed and mapped onto 3D models, creating realistic and accurate facial animations.

Facial motion capture is utilized across various industries, including cinema, video games, and even medical fields for analyzing facial expressions and movements. One of its most famous applications in Hollywood is the creation of digital characters such as Gollum in The Lord of the Rings or Caesar in Planet of the Apes using this technology.

Recording facial movements is a revolutionary technology with applications in cinema, medicine, and neuroscience. With technological advancements, these systems have become more accurate and efficient, allowing broader applications in multiple industries.

Typically, facial motion capture uses high-resolution cameras and special facial markers, which are temporarily attached to the actor’s face. Some systems, however, are markerless: they rely on computer vision to track facial features directly from video.

This technology is particularly valuable in cinematic animation and video games, enabling the creation of characters with more natural emotions and reactions. Notable examples include films like Avatar and video games such as Hidden Agenda.

How Facial Motion Capture Works

Facial motion capture typically involves a combination of specialized cameras (often high-speed), biometric sensors, and advanced software. The process is generally as follows:

Step 1: Placement of Sensors or Cameras

This is a key step for recording the facial movements of actors or real people. It is particularly important in systems that capture precise and detailed facial expressions.

Placing sensors on the face:

Markers, such as small reflective dots or painted dots, are applied at key points on the actor’s face, including around the eyes, eyebrows, nose, and mouth, and are observed by specialized facial cameras. These points help the system track subtle facial movements, such as eyebrow raises, mouth shapes, and eye movements, with high accuracy.

Using specialized cameras:

Many facial motion capture setups use multiple cameras that record simultaneously from different angles. This multi-angle approach allows the system to accurately capture three-dimensional data of the facial movements.

Camera calibration and setup:

Cameras must be carefully calibrated to ensure that all facial movements are captured from every angle with minimal error. Proper calibration ensures that even the smallest details of the facial expressions are recorded correctly.

Ensuring optimal environmental conditions:

Lighting and other environmental factors must be controlled to provide clear and accurate images of the actor’s face. The environment should minimize noise or interference that could affect the quality of the captured data.

This step of facial motion capture directly impacts the accuracy and quality of the final animation or visual effects, because all captured movements serve as the primary data for creating realistic and lifelike facial animations in subsequent production stages.

Step 2: Facial Motion Simulation

The Facial Motion Simulation stage involves transferring the captured facial movement data from an actor or real person to digital face models (such as 3D models) to create precise and realistic animations. This stage is particularly important for producing animated characters or visual effects in films and video games.

Capturing facial motion data:

Initially, during the motion capture phase, sensors or specialized cameras record the actor’s facial movements. The captured data includes detailed information about various facial features such as eyebrows, eyes, mouth, and other facial characteristics. These data points can be stored as 3D points or a set of motion parameters.

Transferring data to digital models:

Once captured, the facial motion data is transferred to 3D animation software. Here, the motion capture data is applied to a digital face model—typically a 3D representation of the character. The model is designed to be flexible, allowing it to accurately reproduce a wide range of facial movements.

Processing and precise motion simulation:

Animation software then automatically or semi-automatically maps the captured motions onto the 3D model. These motions can include muscle deformations, skin stretches, mouth movements during speech, and other expressions such as smiling, anger, or fear. Advanced algorithms simulate the interactions between facial muscles and skin to ensure the movements appear natural and lifelike.
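Many facial rigs represent expressions as blendshapes (also called morph targets): each shape stores the vertex positions of one expression, and the animated face is the neutral mesh plus a weighted sum of each shape’s offset from neutral. Assuming a blendshape-based rig, which the text above does not specify, the evaluation step can be sketched as follows; the three-vertex "mesh" and the shape names are toy placeholders.

```python
import numpy as np

def evaluate_blendshapes(neutral, targets, weights):
    """Blend a face mesh: neutral + sum_i w_i * (target_i - neutral).

    neutral: (V, 3) vertex positions of the neutral face.
    targets: dict name -> (V, 3) vertex positions of each expression.
    weights: dict name -> weight, usually in [0, 1].
    """
    neutral = np.asarray(neutral, dtype=float)
    result = neutral.copy()
    for name, w in weights.items():
        result += w * (np.asarray(targets[name], dtype=float) - neutral)
    return result

neutral = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.5, -0.5, 0.0]])
targets = {
    "smile": neutral + np.array([[0, 0.1, 0], [0, 0.1, 0], [0, 0, 0]]),
    "jaw_open": neutral + np.array([[0, 0, 0], [0, 0, 0], [0, -0.3, 0]]),
}
face = evaluate_blendshapes(neutral, targets, {"smile": 0.5, "jaw_open": 1.0})
```

Captured performance data then only needs to supply a set of weights per frame, which is part of why blendshape rigs are popular for facial animation.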

Manual corrections and refinement:

Although motion capture data is typically very accurate, some movements may require manual refinement. Animators adjust certain facial motions to enhance details in the animation. This step may include:

-

Correcting motion precision.

-

Synchronizing facial movements with body or hand actions.

-

Adding specific emotional expressions to enhance realism.

Adding Visual Details and Refinement

To make facial motion simulations more realistic, visual details such as lighting effects, shadows, and skin deformations (e.g., wrinkles or subtle texture changes) are often simulated with precision. This process is typically performed using graphics and rendering software to ensure the final face appears lifelike and natural.

Feedback and final adjustments:

At the final stage, after applying all motions and corrections, animators and VFX teams receive feedback from the director or production team. Final adjustments are then made based on artistic and cinematic requirements. These adjustments may include:

-

Modifying the intensity of facial movements.

-

Adjusting the physical behavior of facial features.

-

Enhancing the overall realism of the animation.

As a result, the facial motion simulation stage accurately recreates human facial movements in the digital world, playing a crucial role in producing believable animated characters, cinematic visual effects, or dynamic, human-like characters in video games.

Step 3: Data Analysis

The Data Analysis stage is a critical step where the raw captured facial motion data is transformed into usable information for animation and visual effects. In this phase, the data is carefully examined and analyzed to ensure that facial movements are accurately and realistically simulated. This process is complex and requires attention to detail.

Collection of raw data:

Initially, sensors or cameras capture the actor’s facial movements. These data usually include 3D points (markers) or motion parameters for facial features such as eyebrows, eyes, mouth, and muscle deformations. The data may also include information about position changes, velocity, and direction of each facial point.

Data cleaning:

Raw data from motion capture systems often contain noise or errors caused by technical limitations or environmental factors (e.g., poor lighting or incomplete sensor coverage). During this step, these errors are identified and removed. The process typically involves:

-

Filtering the data to remove anomalies.

-

Correcting deviations to ensure accurate motion representation.

-

Verifying the precision and reliability of all captured information.

Specialized software tools are used in this stage to detect and correct issues, ensuring that the processed data is ready for animation application.

Facial Motion Analysis

In this stage, the captured facial movements are analyzed in detail. This analysis may include examining muscle movements in response to different emotional expressions (e.g., eyebrow movements during anger or mouth shapes during a smile). The goal is to fully understand and simulate natural facial motion.

This analysis helps animators and designers identify which parts of the face require adjustments to make the animation appear more natural and realistic. For example, the analysis might reveal that movements in specific facial regions, such as the nose or chin, were not captured accurately and need correction.

Extraction of motion features:

After cleaning and analyzing the data, key facial motion features are extracted. These features may include detailed information about:

-

Muscle contractions

-

Skin deformations and stretching

-

Facial symmetry changes

These extracted features are then used to create more accurate and lifelike animations.
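Some of these features reduce to simple geometry on marker positions. Assuming hypothetical markers on the upper and lower lip and at both mouth corners, and a face already aligned so that its vertical midline lies at x = 0, the sketch below computes mouth opening, mouth width, and a left/right asymmetry measure. Real feature sets are richer and are usually normalized for head pose and face size.

```python
import numpy as np

def mouth_features(markers, midline_x=0.0):
    """Compute simple mouth features from 3D marker positions (in metres).

    markers: dict with the hypothetical keys 'upper_lip', 'lower_lip',
    'mouth_left', and 'mouth_right'.
    """
    p = {k: np.asarray(v, dtype=float) for k, v in markers.items()}
    opening = np.linalg.norm(p["upper_lip"] - p["lower_lip"])
    width = np.linalg.norm(p["mouth_left"] - p["mouth_right"])
    left_reach = abs(p["mouth_left"][0] - midline_x)
    right_reach = abs(p["mouth_right"][0] - midline_x)
    # 0 means symmetric; positive means 'mouth_left' is farther from the midline.
    asymmetry = (left_reach - right_reach) / width
    return {"opening": float(opening), "width": float(width),
            "asymmetry": float(asymmetry)}

frame = {
    "upper_lip": [0.0, 0.010, 0.0],
    "lower_lip": [0.0, -0.015, 0.0],
    "mouth_left": [0.030, 0.0, 0.0],
    "mouth_right": [-0.020, 0.0, 0.0],
}
features = mouth_features(frame)
```

Tracked over time, values like these show how far the mouth opens during speech or how lopsided a smile is, which animators can compare against the digital character.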

Mapping data to digital models:

The analyzed data is then transferred to 3D facial models. These data are used to simulate precise facial movements in the digital environment. For example, animators may use the analyzed data to adjust mouth shapes or eye movements, ensuring that the digital character’s facial motions fully match the actor’s real movements.
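If the facial model is a blendshape rig (an assumption; the text does not name a specific method), one common way to map the analyzed marker data onto it is to solve for the blendshape weights that best reproduce the observed marker offsets in a least-squares sense. The sketch below uses NumPy’s ordinary least squares and then clips the weights to [0, 1]; the marker data is a hypothetical toy example, and production solvers typically enforce bounds during the solve and add regularization.

```python
import numpy as np

def solve_weights(neutral_markers, shape_markers, observed):
    """Estimate blendshape weights from marker positions by least squares.

    neutral_markers: (M, 3) marker positions on the neutral face.
    shape_markers: list of (M, 3) marker positions for each blendshape at weight 1.
    observed: (M, 3) marker positions captured in the current frame.
    Returns an array of weights clipped to [0, 1].
    """
    neutral = np.asarray(neutral_markers, dtype=float)
    # Each column of B holds one blendshape's flattened marker offsets.
    B = np.column_stack([(np.asarray(s, dtype=float) - neutral).ravel()
                         for s in shape_markers])
    d = (np.asarray(observed, dtype=float) - neutral).ravel()
    w, *_ = np.linalg.lstsq(B, d, rcond=None)
    return np.clip(w, 0.0, 1.0)

neutral = np.zeros((3, 3))
smile = np.array([[0, 0.1, 0], [0, 0.1, 0], [0, 0, 0]], dtype=float)
jaw_open = np.array([[0, 0, 0], [0, 0, 0], [0, -0.3, 0]], dtype=float)
observed = 0.4 * smile + 0.7 * jaw_open
weights = solve_weights(neutral, [smile, jaw_open], observed)  # approximately [0.4, 0.7]
```

The recovered weights can then drive the character’s face frame by frame, so that its expressions follow the actor’s performance.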

Corrections and revisions:

Finally, the motion analysis allows the animation team to refine specific facial movements. These refinements may include:

-

Adjusting the speed of movements

-

Improving coordination between different facial regions (e.g., synchronizing eyebrow and eye movements)

-

Applying more accurate physical characteristics (e.g., skin stretch)

Emotion and expression analysis:

In some projects, the analysis focuses specifically on facial expressions and emotions. Facial motion data can help simulate human emotions such as anger, sadness, happiness, and fear more realistically. In this stage, complex models are used to simulate interactions between facial muscles, producing animations that convey subtle emotional nuances.

Advantages of Facial Motion Capture

High Accuracy:

One of the greatest advantages of this technology is its high precision in capturing facial movements. This accuracy helps designers and producers reconstruct facial motions naturally and realistically.

Time and Cost Efficiency:

Instead of animators creating every facial movement by hand, this technology records an actor’s facial performance quickly and accurately, reducing production time and cost.

Creating Digital Characters and Robots:

Another important application is in robotics and digital characters, where captured facial movements can be applied to robots or digital avatars, allowing them to interact more naturally with humans.

Challenges and Limitations

High Cost:

Facial motion capture systems can be very expensive, which may limit accessibility for some companies or projects.

Requirement for Controlled Environments:

These systems typically need carefully controlled environments, including proper lighting and other conditions, to achieve maximum recording accuracy.

Sensitivity to Details:

Some subtle facial details, such as movements of small muscles, may not be captured perfectly, which can affect the overall realism.

Role of a Motion Capture Technician

A motion capture technician plays a key role in the production of animations, games, and digital films. This profession requires high technical skills, problem-solving abilities, and the capacity to work within a production team. Given the importance of this technology today, motion capture technicians are essential in delivering high-quality digital content.

The responsibilities of a motion capture technician include several areas:

Equipment Preparation

Installation and Setup:

The technician must install and configure all motion capture equipment, including cameras, sensors, and software systems. This involves positioning cameras, setting up motion sensors, and measuring distances accurately.

Ensuring Proper Functionality:

Before recording begins, the technician must test all systems to ensure they are functioning correctly. This may include checking camera connections, calibrating sensors, and testing signal accuracy.

Motion Capture System Operation

Recording Motion:

The technician must oversee the motion capture process and ensure that all body and facial movements of the actors or other performers are accurately recorded. This may involve specialized cameras that track many markers simultaneously.

Data Management:

The technician must effectively store and manage the captured data. This data should be directly transferred to animation and modeling software so that it can be accurately converted into digital models.

Calibration and Adjustments:

-

System Calibration: Throughout the motion capture process, the technician must check the calibration and recalibrate the systems when needed to ensure the accuracy and reliability of the recorded data.

-

Camera and Sensor Settings: Precise adjustment of cameras and sensors is essential to capture motion details. This includes adjusting viewing angles, distances, and depth of field.

Quality Assurance and Accuracy:

-

Quality Control: The motion capture technician must monitor the accuracy of the data during recording and prevent errors such as noise or data interference.

-

Technical Troubleshooting: The technician must quickly identify and resolve technical issues to avoid disrupting the recording process.

Support for Animation and Production Teams:

-

Collaboration with Animators and Designers: The technician must work closely with animators and 3D designers. After capturing the motion, the data must be converted into digital characters, so the technician ensures that the data is compatible with animation software.

-

Maintaining Communication Throughout Production: In long-term projects, the technician must stay in constant communication with other team members and effectively resolve any issues that arise.

Skills Required for a Motion Capture Technician

Technical and Specialized Skills:

-

Familiarity with Motion Capture Equipment: A technician must be thoroughly familiar with various motion capture tools, including specialized cameras, motion sensors, and related software.

-

Deep Understanding of Computer Systems: The ability to work with different software systems to manage data and transfer it to 3D environments requires a solid understanding of computer systems.

-

Calibration and System Setup: The ability to calibrate and configure different systems to capture accurate movements is essential.

Communication and Teamwork Skills:

-

Team Collaboration: The technician must be able to work effectively within a multi-person production team and maintain good communication with other team members.

-

Problem-Solving: In various situations, the technician must have the ability to identify and resolve technical issues within motion capture systems.

Precision and Attention to Detail:

-

High Accuracy: Since even minor errors in recorded data can lead to significant problems in the final production, precision and careful attention to detail are crucial for a motion capture technician.

Workplaces and Projects

Motion capture technicians typically work in animation studios, video game development companies, film studios, and research centers. These roles usually take place in controlled environments with advanced equipment, often including large studios with multiple cameras and complex motion capture systems.

Challenges and Limitations

-

Expensive Equipment: Many studios and projects require high-end motion capture equipment, which can result in significant production costs.

-

Technical Complexity: Motion capture sessions may encounter various technical problems that require highly skilled technicians to resolve.

-

High-Pressure Environments: Many motion capture projects are time-sensitive, and technicians must be able to perform effectively in busy and high-stress conditions.